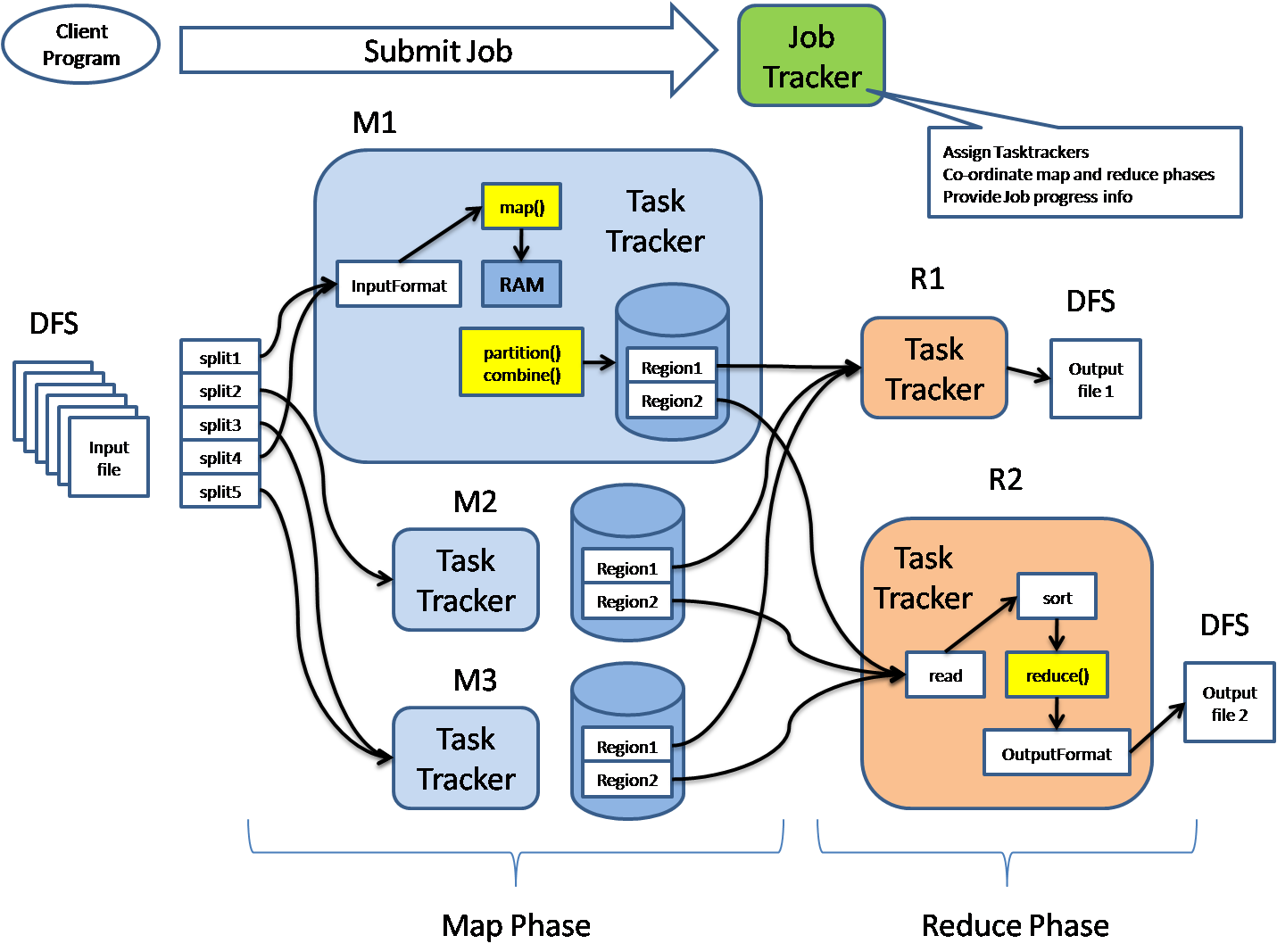

A MapReduce application processes the data in input splits on a record-by-record basis, and MapReduce understands each record to be a key/value pair. After the input splits have been calculated, the mapper tasks can start processing them as soon as the Resource Manager's scheduling facility assigns them their processing resources. (In Hadoop 1, the JobTracker assigns mapper tasks to specific processing slots.)

The mapper task itself processes its input split one record at a time; in the figure, this lone record is represented by a single key/value pair. In the case of our flight data, when the input splits are calculated (using the default file processing method for text files), the assumption is that each row in the text file is a single record.

For each record, the text of the row itself represents the value, and the byte offset of the start of the row, measured from the beginning of the file, is considered to be the key.
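The key/value convention described above can be sketched in a few lines of Python. This is only an illustration, not Hadoop's own record reader: the function name `read_records`, the `start_offset` parameter, and the sample rows are all hypothetical.

```python
from typing import Iterator, Tuple


def read_records(data: bytes, start_offset: int = 0) -> Iterator[Tuple[int, str]]:
    """Yield (byte offset, row text) pairs, one pair per row of text."""
    offset = start_offset
    for raw_line in data.splitlines(keepends=True):
        # Key: the byte position where the row starts.
        # Value: the row itself, without its trailing newline.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw_line)


# Hypothetical flight rows (carrier, origin, destination), not the real data set.
sample = b"AA,JFK,LAX\nUA,SFO,ORD\nDL,ATL,SEA\n"
records = list(read_records(sample))
```

Each row here is 11 bytes long including its newline, so the keys come out as 0, 11, and 22.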

You might be wondering why the row number isn't used instead of the byte offset. When you consider that a very large text file is broken down into many individual data blocks, and is processed as many splits, the row number is a risky concept.

MapReduce is one of the three components of Hadoop. The Hadoop Distributed File System (HDFS) is responsible for storing a file, and MapReduce is responsible for processing it. In Hadoop 1, a cluster has one master node and multiple slave nodes. The master node runs the JobTracker, which manages task scheduling on the cluster, and each slave node runs a TaskTracker, which controls the execution of individual tasks. Scheduling is slot-based: each TaskTracker is configured with a static allocation of fixed-size slots, which are divided into map slots and reduce slots at configuration time, and a map slot can be used only to run a map task. Slots are not preassigned to a job in a fixed map/reduce ratio; whatever map slots are available to the job are used by its mappers, and once map output begins to spill, the corresponding reduce tasks may start. The user can also set the maximum number of slots for mappers and reducers. Hadoop 2 supports high availability both for the Resource Manager and for the Application Master for MapReduce jobs. Maps are the individual tasks that transform input records into intermediate records. The intermediate records do not need to be of the same type as the input records, and a given input pair may map to zero or many output pairs. The Hadoop MapReduce framework spawns one map task for each InputSplit generated by the InputFormat for the job.

The number of lines in each split varies, so it would be impossible to compute the number of rows preceding the one being processed. With the byte offset, however, you can be precise, because every block has a fixed number of bytes.
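The arithmetic behind this argument can be shown with a small sketch. It assumes splits of a fixed size in bytes and ignores how a real record reader handles rows that straddle a split boundary; `split_ranges` is a hypothetical helper, not a Hadoop API.

```python
from typing import List, Tuple


def split_ranges(file_length: int, split_size: int) -> List[Tuple[int, int]]:
    """Return (start offset, length) for each fixed-size split of a file."""
    return [
        (start, min(split_size, file_length - start))
        for start in range(0, file_length, split_size)
    ]


# A 300-byte file cut into 128-byte splits: the starting byte of every split
# is known up front, without reading any data or counting any rows.
ranges = split_ranges(300, 128)
```

The starting byte of split *n* is simply `n * split_size`, whereas the starting row number of split *n* could be known only after every earlier split had been read.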

As a mapper task processes each record, it generates a new key/value pair: The key and the value here can be completely different from the input pair. The output of the mapper task is the full collection of all these key/value pairs.
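As a rough illustration, a mapper that counts flights per carrier might look like the sketch below. The field layout (carrier code in the first column) and the names `map_record` and `run_mapper` are assumptions made for the example; the actual flight data may be organized differently.

```python
from typing import Iterable, Iterator, List, Tuple


def map_record(offset: int, line: str) -> Iterator[Tuple[str, int]]:
    """Turn one input record into zero or more output key/value pairs."""
    if not line.strip():
        return  # a blank row produces no output at all
    carrier = line.split(",")[0]  # assumed: carrier code is the first field
    # The output key (carrier) and value (1) have nothing in common with
    # the input key (byte offset) and value (the whole row).
    yield carrier, 1


def run_mapper(records: Iterable[Tuple[int, str]]) -> List[Tuple[str, int]]:
    """Collect every pair emitted for every record in the split."""
    output = []
    for offset, line in records:
        output.extend(map_record(offset, line))
    return output


mapper_output = run_mapper(
    [(0, "AA,JFK,LAX"), (11, "UA,SFO,ORD"), (22, ""), (23, "AA,LAX,JFK")]
)
```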

Before the final output file for each mapper task is written, the output is partitioned based on the key and sorted. This partitioning means that all of the values for each key are grouped together.
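The sketch below imitates that partition-and-sort step in plain Python. Hadoop's default partitioner hashes the key and takes the result modulo the number of reducers; here `zlib.crc32` stands in for the key's hash code so that the result is repeatable, and the function names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition_for(key: str, num_reducers: int) -> int:
    """Pick a reducer for a key (crc32 is a stand-in for the key's hash code)."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def partition_and_sort(
    pairs: Iterable[Tuple[str, int]], num_reducers: int
) -> Dict[int, List[Tuple[str, int]]]:
    """Group mapper output by partition, then sort each partition by key."""
    partitions = defaultdict(list)
    for key, value in pairs:
        partitions[partition_for(key, num_reducers)].append((key, value))
    return {p: sorted(rows) for p, rows in partitions.items()}


pairs = [("UA", 1), ("AA", 1), ("DL", 1), ("AA", 1)]
result = partition_and_sort(pairs, num_reducers=3)
```

Because the partition depends only on the key, every pair with the same key ends up in the same partition, and therefore at the same reducer.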

In the case of the fairly basic sample application, there is only a single reducer, so all the output of the mapper task is written to a single file. But in cases with multiple reducers, every mapper task may generate multiple output files as well.

The breakdown of these output files is based on the partitioning key. For example, if there are only three distinct partitioning keys output for the mapper tasks and you have configured three reducers for the job, there will be three mapper output files. In this example, if a particular mapper task processes an input split and it generates output with two of the three keys, there will be only two output files.

Always compress your mapper tasks' output files. The biggest benefit here is in performance gains, because writing smaller output files minimizes the inevitable cost of transferring the mapper output to the nodes where the reducers are running.
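To get a feel for the potential savings, the following sketch compresses some made-up mapper output with Python's `zlib`. This is only a stand-in: on a real cluster, compression of map output is switched on through job configuration (in Hadoop 2, the `mapreduce.map.output.compress` and `mapreduce.map.output.compress.codec` properties), and the codec and the achieved ratio will differ from what this toy data shows.

```python
import zlib

# Made-up mapper output: tab-separated carrier/count lines, highly repetitive.
lines = [f"{carrier}\t1\n" for carrier in ("AA", "UA", "DL") * 1000]
raw = "".join(lines).encode("utf-8")

compressed = zlib.compress(raw)

# Fraction of the original size that would have to cross the network.
ratio = len(compressed) / len(raw)
```

Mapper output often repeats the same keys many times, which is the kind of data that compresses well.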

The default partitioner is more than adequate in most situations, but sometimes you may want to customize how the data is partitioned before it's processed by the reducers. For example, you may want the data in your result sets to be sorted by both the keys and their values, which is known as a secondary sort.

To do this, you can override the default partitioner and implement your own. This process requires some care, however, because you'll want to ensure that the number of records in each partition is roughly uniform. (If one reducer has to process much more data than the other reducers, you'll wait for your MapReduce job to finish while the single overworked reducer is slogging through its disproportionately large data set.)

Using uniformly sized intermediate files, you can better take advantage of the parallelism available in MapReduce processing.
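One common way to approach a secondary sort is to emit a composite key and partition on only part of it. The sketch below assumes a hypothetical composite key of (carrier, delay in minutes): it partitions on the carrier alone, sorts on the full key, and reports how evenly the records are spread across the partitions. The names and the skew measure are illustrative choices, not part of Hadoop.

```python
import zlib
from collections import Counter
from typing import Iterable, Tuple

CompositeKey = Tuple[str, int]  # assumed layout: (carrier, delay in minutes)


def natural_key_partition(key: CompositeKey, num_reducers: int) -> int:
    """Partition on the carrier only, so one carrier's records stay together."""
    carrier, _delay = key
    return zlib.crc32(carrier.encode("utf-8")) % num_reducers


def partition_sizes(pairs: Iterable[Tuple[CompositeKey, str]], num_reducers: int) -> Counter:
    """Count how many records each reducer would receive."""
    sizes = Counter({p: 0 for p in range(num_reducers)})
    for key, _value in pairs:
        sizes[natural_key_partition(key, num_reducers)] += 1
    return sizes


def skew(sizes: Counter) -> float:
    """Largest partition divided by the mean partition size (1.0 is perfectly even)."""
    mean = sum(sizes.values()) / len(sizes)
    return max(sizes.values()) / mean if mean else 0.0


pairs = [(("AA", 12), "JFK"), (("UA", 3), "SFO"), (("AA", 5), "LAX")]
ordered = sorted(pairs, key=lambda kv: kv[0])  # sort by carrier, then by delay
sizes = partition_sizes(pairs, num_reducers=4)
```

A skew value well above 1.0 would suggest that one reducer is going to receive far more records than the others, which is the situation a custom partitioner needs to avoid.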
